By ALEX ROSENBERG and WILLIAM EGGINTON

The Stone is a forum for contemporary philosophers on issues both timely and timeless.

In separate posts at The Stone in recent weeks, Alex Rosenberg and William Egginton set out their views on, among other things, naturalism, literature and the types of knowledge derived from science and from the arts. In the following exchange, they further stake out their positions on the relationship between the humanities and the sciences.

Galileo’s Gambit By Alex Rosenberg

Can neurophilosophy save the humanities from hard science? Hard science (first physics, then chemistry and biology) got going in the 1600s. Philosophers like Descartes and Leibniz almost immediately noticed its threat to human self-knowledge. But no one really had to choose between scientific explanations of human affairs and those provided in history and the humanities until the last decades of the 20th century. Now, every week newspapers, magazines and scientific journals report on how neuroscience is trespassing into domains previously the sole preserve of the interpretive humanities. Neuroscience’s explanations and the traditional ones compete; they cannot both be right. Eventually we will have to choose between human narrative self-understanding and science’s explanations of human affairs. Neuroeconomics, neuroethics, neuro-art history and neuro-lit-crit are just tips of an iceberg on a collision course with the ocean liner of human self-knowledge.

Let’s see why we will soon have to face a choice we’ve been able to postpone for 400 years.

It is hard to challenge the hard sciences’ basic picture of reality. That is because it began by recursively reconstructing and replacing the common beliefs that turned out to be wrong by standards of everyday experience. The result, rendered unrecognizable to everyday belief after 400 years or so, is contemporary physics, chemistry and biology. Why date science only to the 1600s? After all, mathematics dates back to Euclid, and Archimedes made empirical discoveries in the third century B.C. But 1638 was when Galileo first showed that a little thought is all we need to undermine the mistaken belief that neither Archimedes nor Aristotle had seen through but that stood in the way of science.

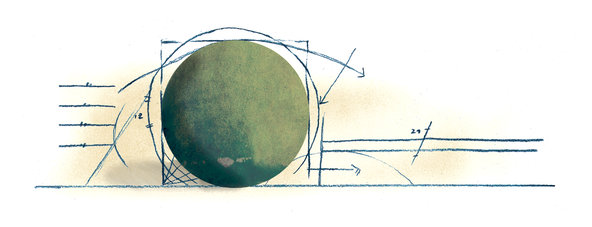

Galileo offered a thought-experiment that showed, contrary to common beliefs, that objects can move without any forces pushing them along at all. It sounds trivial and yet this was the breakthrough that made physics and the rest of modern science possible. Galileo’s reasoning was undeniable: roll a ball down an incline, it speeds up; roll it up an incline, it slows down.

So, if you roll it onto a frictionless horizontal surface, it will have to go forever. Stands to reason, by common sense. But that simple bit of reasoning destroyed the Aristotelian world-picture and ushered in science. Starting there, 400 years of continually remodeling everyday experience has produced a description of reality incompatible with common sense; that reality includes quantum mechanics, general relativity, natural selection and neuroscience.

Descartes and Leibniz made important contributions to science’s 17th-century “take off.” But they saw exactly why science would be hard to reconcile with historical explanation, the human “sciences,” the humanities, theology and our own interior psychological monologs. These undertakings trade on a universal, culturally inherited “understanding” that interprets human affairs via narratives that “make sense” of what we do. Interpretation is supposed to explain events, usually in terms of motivations that participants themselves recognize, sometimes by uncovering meanings the participants don’t themselves appreciate.

Natural science deals only in momentum and force, elements and compounds, genes and fitness, neurotransmitters and synapses. These things are not enough to give us what introspection tells us we have: meaningful thoughts about ourselves and the world that bring about our actions. Philosophers since Descartes have agreed with introspection, and they have provided fiendishly clever arguments for the same conclusion. These arguments ruled science out of the business of explaining our actions because it cannot take thoughts seriously as causes of anything.

Descartes and Leibniz showed that thinking about one’s self, or for that matter anything else, is something no purely physical thing, no matter how big or how complicated, can do. What is most obvious to introspection is that thoughts are about something. When I think of Paris, there is a place 3000 miles away from my brain, and my thoughts are about it. The trouble is, as Leibniz specifically showed, no chunk of physical matter could be “about” anything. The size, shape, composition or any other physical fact about neural circuits is not enough to make them be about anything. Therefore, thought can’t be physical, and that goes for emotions and sensations too. Some influential philosophers still argue that way.

Neuroscientists and neurophilosophers have to figure out what is wrong with this and similar arguments. Or they have to conclude that interpretation, the stock in trade of the humanities, does not after all really explain much of anything at all. What science can’t accept is some “off-limits” sign at the boundary of the interpretative disciplines.

Ever since Galileo, science has been strongly committed to the unification of theories from different disciplines. It cannot accept that the right explanations of human activities must be logically incompatible with the rest of science, or even just independent of it. If science were prepared to settle for less than unification, the difficulty of reconciling quantum mechanics and general relativity wouldn’t be the biggest problem in physics. Biology would not accept the gene as real until it was shown to have a physical structure — DNA — that could do the work geneticists assigned to the gene. For exactly the same reason science can’t accept interpretation as providing knowledge of human affairs if it can’t at least in principle be absorbed into, perhaps even reduced to, neuroscience.

That’s the job of neurophilosophy.

This problem, that thoughts about ourselves or anything else for that matter couldn’t be physical, was for a long time purely academic. Scientists had enough on their plates for 400 years just showing how physical processes bring about chemical processes, and through them biological ones. But now neuroscientists are learning how chemical and biological events bring about the brain processes that actually produce everything the body does, including speech and all other actions. Research, including Nobel Prize-winning neurogenomics and fMRI (functional magnetic resonance imaging), has revealed how bad interpretation’s explanations of our actions are. And there are clever psychophysical experiments that show us that introspection’s insistence that interpretation really does explain our actions is not to be trusted. These findings cannot be reconciled with explanation by interpretation. The problem they raise for the humanities can no longer be postponed. Must science write off interpretation the way it wrote off phlogiston theory: a nice try but wrong? Increasingly, the answer that neuroscience gives to this question is “afraid so.”

Few people are prepared to treat history, (auto)biography and the human sciences like folklore. The reason is obvious. The narratives of history, the humanities and literature provide us with the feeling that we understand what they seek to explain. At their best they also trigger emotions we prize as marks of great art.

But that feeling of understanding, that psychological relief from the itch of curiosity, is not the same thing as knowledge. It is not even a mark of it, as children’s bedtime stories reveal. If the humanities and history provide only feelings (ones explained by neuroscience), that will not be enough to defend their claims to knowledge.

The only solution to the problem faced by the humanities, history and (auto)biography is to show that interpretation can somehow be grounded in neuroscience. That is job No. 1 for neurophilosophy. And the odds are against it. If this project doesn’t work out, science will have to face plan B: treating the humanities the way we treat the arts, indispensable parts of human experience but not to be mistaken for contributions to knowledge.

Alex Rosenberg is the R. Taylor Cole Professor and philosophy department chair at Duke University. He is the author of 12 books, including “The Philosophy of Science: A Contemporary Approach,” “The Philosophy of Social Science” and, most recently, “The Atheist’s Guide to Reality.”

The Cosmic Imagination By William Egginton

Do the humanities need to be defended from hard science?

They might, if Alex Rosenberg is right when he claims that “neuroscience is trespassing into domains previously the sole preserve of the interpretive disciplines,” and that “neuroscience’s explanations and the traditional ones compete; they cannot both be right.”

While neuroscience may well have very interesting things to say about how brains go about making decisions and producing different interpretations, it does not follow that the knowledge thus produced replaces humanistic knowledge. In fact, the only way we can understand this debate is by using humanist methodology (from reading historical and literary texts to interpreting them to using them in the form of an argument) to support a very different notion of knowledge than the one Professor Rosenberg presents.

In citing Galileo, Professor Rosenberg seeks to show us how a “little thought is all we need to undermine the mistaken belief neither Archimedes nor Aristotle had seen through but that stood in the way of science.” A lone, brave man, Galileo Galilei, armed with just his reason, defies centuries of tradition and a brutal, repressive Church, and “that simple bit of reasoning destroyed the Aristotelian world-picture and ushered in science.” The only problem is that this interpretation is largely incomplete; the fuller story is far more complex and interesting.

Galileo was both a lover of art and literature, and a deeply religious man. As the mathematician and physicist Mark A. Peterson has shown in his new book, “Galileo’s Muse: Renaissance Arts and Mathematics,” Galileo’s love for the arts profoundly shaped his thinking, and in many ways helped pave the way for his scientific discoveries. An early biography of Galileo by his contemporary Niccolò Gherardini points out that, “He was most expert in all the sciences and arts, as if he were professor of them. He took extraordinary delights in music, painting, and poetry.” For his part, Peterson takes great delight in demonstrating how Galileo’s immersion in these arts informed his scientific discoveries, and how art and literature prior to Galileo often planted the seeds of scientific progress to come.

Professor Rosenberg believes that Galileo was guided solely by everyday experience and reason in debunking the false idea that for an object to keep moving it must be subjected to a continuous force. But one could just as well argue that everyday experience would, and did, dictate some version of that theory. As humans have no access to a frictionless realm and can only speculate as to whether unimpeded motion would continue indefinitely, the repeated observations that objects in motion do come to a rest if left on their own certainly supported the standard theory. So if it was not mere everyday experience that led to Galileo’s discovery, what did? Clearly Galileo was an extraordinary man, and a crucial aspect of what made him that man was the intellectual world he was immersed in. This world included mathematics, of course, but it was also full of arts and literature, of philosophy and theology. Peterson argues forcefully, for instance, that Galileo’s mastery of the techniques involved in creating and thinking about perspective in painting could well have influenced his thinking about the relativity of motion, since both require comprehending the importance of multiple points of view.

The idea that the perception of movement depends on one’s point of view also has forebears in proto-scientific thinkers who are far less suitable candidates for the appealing story of how common sense suddenly toppled a 2,000-year-old tradition to usher modern science into the world. Take the poet, philosopher and theologian Giordano Bruno, who seldom engaged in experimentation and who, 30 years before Galileo’s own trial, refused to recant the beliefs that led him to be burned at the stake, beliefs that included the infinity of the universe and the multiplicity of worlds.

The theory of the infinity of the world was not itself new, having been formulated in various forms before Bruno, notably by Lucretius, whom Bruno read avidly, and more recently by Nicholas of Cusa. Bruno was probably the first, though, to identify the stars as solar systems, infinite in number, possibly orbited by worlds like our own.

Such a view had enormous consequences for the theory of motion. For the scholastics, the universe was finite and spherical. The circular motion of the heavens was imparted by the outermost sphere, which they called the primum mobile, or first mover, and associated with God. The cosmos was hence conceived of as being divided into an outer realm of perfect and eternal, circular motion, and an inner realm of corruptibility, where objects moved in straight lines. As the scholastics denied the existence of space outside the primum mobile, citing Aristotle’s dictum that nature abhors a vacuum, the motion of the outer cosmos could only be conceived of as occurring in relation to a motionless center.

When Bruno disputed that the cosmos had boundaries, however, he undermined the justification for assuming that the earth (or anywhere else, for that matter) was its center. From there it was but a short step to realizing that everything can equally be considered at rest or in motion, depending on your point of view. Indeed, this very reasoning animated Galileo’s thought, which he expressed in the literary form of the dialog, much as Bruno did.

In Galileo’s magisterial “Dialogue Concerning the Two Chief World Systems,” his key argument for the diurnal rotation of the earth relies on proving that bodies in motion together will be indistinguishable from bodies at rest. “It is obvious, then, that motion which is common to many moving things is idle and inconsequential to the relation of these movables among themselves, nothing being changed among them, and that it is operative only in the relation that they have with other bodies lacking that motion, among which their location is changed. Now, having divided the universe into two parts, one of which is necessarily movable and the other motionless, it is the same thing to make the earth alone move, and to move all the rest of the universe, so far as concerns any result which may depend upon such movement.”

Galileo’s insight into the nature of motion was not merely the epiphany of everyday experience that brushed away the fog of scholastic dogma; it was a logical consequence of a long history of engagements with an intellectual tradition that encompassed a multitude of forms of knowledge. That force is not required for an object to stay in motion goes hand in hand with the realization that motion and rest are not absolute terms, but can only be defined relative to what would later be called inertial frames. And this realization owes as much to a literary, philosophical and theological inquiry as it does to pure observation.

Professor Rosenberg uses his brief history of science to ground the argument that neuroscience threatens the humanities, and the only thing that can save them is a neurophilosophy that reconciles brain processes and interpretation. “If this project doesn’t work out,” he writes, “science and the humanities will have to face plan B: treating the humanities the way we treat the arts, indispensable parts of human experience but not to be mistaken for contributions to knowledge.”

But if this is true, should we not then ask what neuroscience could possibly contribute to the very debate we are engaged in at this moment? What would we learn about the truth-value of Professor Rosenberg’s claims or mine if we had even the very best neurological data at our disposal? That our respective pleasure centers light up as we each strike blows for our preferred position? That might well be of interest, but it hardly bears on the issue at hand, namely, the evaluation of evidence, historical or experimental, underlying a claim about knowledge. That evaluation must be interpretative. The only way to dispense with interpretation is to dispense with evidence, and with it knowledge altogether.

If I am right, then Professor Rosenberg’s view is wrong, and all the neuroscience in the world won’t change that.

William Egginton is Andrew W. Mellon Professor in the Humanities and Chair of the Department of German and Romance Languages and Literatures at the Johns Hopkins University. His most recent book is “In Defense of Religious Moderation.”

See online: Bodies in Motion: An Exchange